Deadlock Detection Algorithm

Introduction

Deadlock occurs in computing when two or more processes are unable to continue because each is waiting for the other to release resources. The main concepts involved are mutual exclusion, resource holding, circular wait, and no preemption.

For example, imagine two trains approaching each other on a single track with no possibility to pass. When they meet, neither can move forward, effectively trapping both. This situation illustrates the concept of deadlock in a real-world scenario.

How Does Deadlock occur in the Operating System?

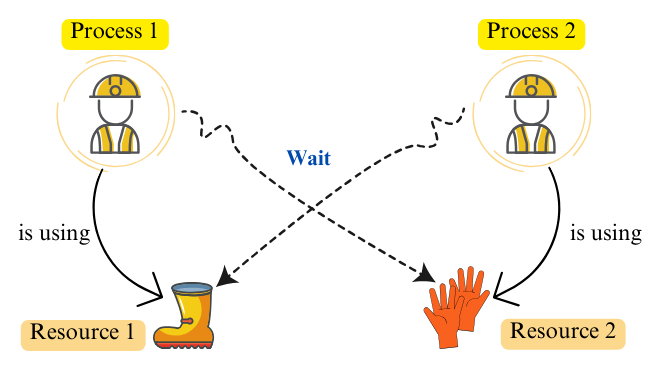

In operating systems, a deadlock occurs when two or more processes hold resources and simultaneously wait for resources held by each other. For instance, consider the following diagram: Process 1 holds Resource 1 and waits for Resource 2, which is held by Process 2. Meanwhile, Process 2 is waiting for Resource 1, creating a circular dependency.

Necessary Conditions for Deadlock in OS

Four Coffman Conditions

Deadlock can occur if all of the following four conditions are met simultaneously:

- Mutual Exclusion: Resources are non-shareable, meaning that only one process can use a resource at any given time.

- Hold and Wait: A process is currently holding at least one resource while waiting to acquire additional resources.

- No Preemption: Resources cannot be forcibly taken from a process; they must be voluntarily released by the process holding them.

- Circular Wait: A set of processes are each waiting for a resource held by another process in a circular chain.

What is Deadlock Detection?

Deadlock detection is the process used by a system to identify if any set of processes is stuck in a state of indefinite waiting, where each process is waiting for resources held by the others, thereby preventing progress. In simpler terms, it involves determining whether any processes are trapped in a circular wait.

Several algorithms are used for this purpose, including:

- Resource Allocation Graph

- Banker's Algorithm

Banker's Algorithm

About Banker's Algorithm

The Banker's Algorithm, developed by Edsger Dijkstra, is a resource allocation and deadlock avoidance algorithm used in operating systems to manage resources and ensure the system remains in a safe state.

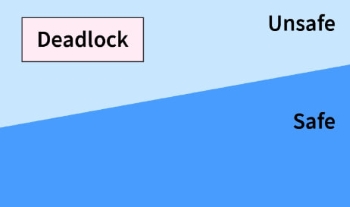

It prevents deadlock by allocating resources in a manner that avoids unsafe states and checks the system's state for safety before granting resource requests.

Data Structures Used:

Let 'n' be the number of processes in the system and 'm' be the number of resource types.

- Processes and Resources:

- Processes(n): These are the active entities in the system that require resources to perform tasks.

- Resources(m): These are the passive entities that processes need, such as CPU time, memory, or I/O devices.

- Maximum (Max):

- It is a 2-d array of size 'n*m' that defines the maximum demand of each process in a system.

- Max[i, j] = k means process Pi may request at most 'k' instances of resource type Rj.

- Allocation:

- It is a 2-d array of size 'n*m' that defines the number of resources of each type currently allocated to each process.

- Allocation[i, j] = k means process Pi is currently allocated 'k' instances of resource type Rj

- Available:

- It is a 1-d array of size 'm' indicating the number of available resources of each type.

- Available[j] = k means there are 'k' instances of resource type Rj

- Need:

- It is a 2-d array of size 'n*m' that indicates the remaining resource need of each process.

- Need[i, j] = k means process Pi currently needs 'k' instances of resource type Rj

Advantages

- Allows more dynamic and flexible allocation of resources compared to prevention and avoidance strategies.

- Detects deadlocks as they occur, enabling timely intervention to resolve them.

- Maximizes resource utilization by not imposing strict constraints to prevent deadlocks.

Disadvantages

- Continuous monitoring and analysis of resource allocation can introduce computational overhead.

- Requires sophisticated mechanisms to accurately detect cycles or knots in resource allocation graphs.

- Delays in detection or resolution can impact system performance and user experience.

Applications

- Operating Systems: Essential for managing resources like memory, CPU, and I/O devices.

- Database Management Systems (DBMS): Critical for managing transaction concurrency and resource locking.

- Distributed Systems: Important for managing resources across multiple nodes in a network.